The Georgia Department of Education released Student Growth Percentile (SGP) data yesterday. SGPs describe the amount of growth a student has demonstrated on the CRCTs relative to academically similar students from across the state. Go here to find out more about Student Growth Percentiles and how they are calculated.

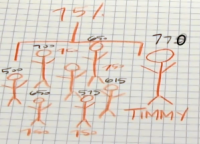

Your school’s Median SGP:

Austin ES, Briarlake ES, Chesnut ES, Dunwoody ES, Evansdale ES, Fernbank ES, Hawthorne ES, Huntley Hills ES, Kingsley ES, Kittredge Magnet, Midvale ES, Montgomery ES, Livsey ES, Vanderlyn ES, Wadsworth Magnet

Look up district and school SGP data across the state here. Educators have access to detailed SGP data for their students and teachers through the Statewide Longitudinal Data System (SLDS). Note: This data is based on growth from FY12 to FY13. The FY15 school year starts next month, so the usefulness of this stale data remains to be seen.

SGPs are used as a measure of student progress in the College and Career Ready Performance Index (CCRPI). SGPs are one of multiple measures used to provide an indication of teacher and leader effectiveness in the Teacher and Leader Keys Effectiveness Systems (TKES and LKES).

Below are DeKalb’s Median SGP scores. With a few exceptions, the SGP data suggests DeKalb Schools’ students are falling behind faster than the other students across the state.

| District | Grade | Subject | Median SGP |
| --- | --- | --- | --- |
| DeKalb Schools | 4 | English/Lang. Arts | 45 |
| DeKalb Schools | 4 | Math | 40 |
| DeKalb Schools | 4 | Reading | 57 |
| DeKalb Schools | 4 | Science | 49 |
| DeKalb Schools | 4 | Soc. Stud. | 40 |
| DeKalb Schools | 5 | English/Lang. Arts | 44 |
| DeKalb Schools | 5 | Math | 43 |
| DeKalb Schools | 5 | Reading | 44 |
| DeKalb Schools | 5 | Science | 38 |
| DeKalb Schools | 5 | Soc. Stud. | 44 |
| DeKalb Schools | 6 | English/Lang. Arts | 44 |
| DeKalb Schools | 6 | Math | 54 |
| DeKalb Schools | 6 | Reading | 59 |
| DeKalb Schools | 6 | Science | 42 |
| DeKalb Schools | 6 | Soc. Stud. | 46 |
| DeKalb Schools | 7 | English/Lang. Arts | 54 |
| DeKalb Schools | 7 | Math | 32 |
| DeKalb Schools | 7 | Reading | 50 |
| DeKalb Schools | 7 | Science | 48 |
| DeKalb Schools | 7 | Soc. Stud. | 42 |
| DeKalb Schools | 8 | English/Lang. Arts | 40 |
| DeKalb Schools | 8 | Math | 38 |
| DeKalb Schools | 8 | Reading | 50 |
| DeKalb Schools | 8 | Science | 44 |
| DeKalb Schools | 8 | Soc. Stud. | 45 |
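As a quick check on the table above, the short Python sketch below tallies how many of DeKalb's grade/subject medians fall below, at, or above 50. The baseline of 50 is an assumption: because SGPs are percentiles, the statewide median should sit near 50, but the state's own published figure is not quoted here. The names `DEKALB_MEDIAN_SGP`, `STATE_BASELINE`, and `compare_to_baseline` are made up for this illustration.

```python
from statistics import median

# DeKalb median SGPs copied from the table above: (grade, subject) -> median SGP.
DEKALB_MEDIAN_SGP = {
    (4, "English/Lang. Arts"): 45, (4, "Math"): 40, (4, "Reading"): 57,
    (4, "Science"): 49, (4, "Soc. Stud."): 40,
    (5, "English/Lang. Arts"): 44, (5, "Math"): 43, (5, "Reading"): 44,
    (5, "Science"): 38, (5, "Soc. Stud."): 44,
    (6, "English/Lang. Arts"): 44, (6, "Math"): 54, (6, "Reading"): 59,
    (6, "Science"): 42, (6, "Soc. Stud."): 46,
    (7, "English/Lang. Arts"): 54, (7, "Math"): 32, (7, "Reading"): 50,
    (7, "Science"): 48, (7, "Soc. Stud."): 42,
    (8, "English/Lang. Arts"): 40, (8, "Math"): 38, (8, "Reading"): 50,
    (8, "Science"): 44, (8, "Soc. Stud."): 45,
}

# Assumption: the statewide median SGP is close to 50, since SGPs are percentiles.
STATE_BASELINE = 50


def compare_to_baseline(scores, baseline=STATE_BASELINE):
    """Count how many entries fall below, at, and above the baseline."""
    values = list(scores.values())
    return {
        "below": sum(v < baseline for v in values),
        "at": sum(v == baseline for v in values),
        "above": sum(v > baseline for v in values),
    }


summary = compare_to_baseline(DEKALB_MEDIAN_SGP)
overall = median(DEKALB_MEDIAN_SGP.values())  # median of the 25 medians
print(summary, overall)
```

Run as written, this counts 19 of the 25 grade/subject combinations below 50, 2 at 50, and 4 above. The middle value of the 25 medians is 44.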

“Extremism in defense of liberty is no vice. Moderation in pursuit of justice is no virtue.”

This illustrates one of the well-publicized problems with student growth models. The state uses a pretty simple formula for calculating growth. That formula, indeed, produces a number whether that student is high, middle or low achieving. That does not mean those numbers are comparable. In other words, does a 17% growth rate for a high-achieving student mean the same thing as it would for a low-achieving student?

For example, I had a student last year who had a 17% growth rate. The previous year he had missed one out of 60 and the year before that he had missed two. (The state looks at the two previous years’ average.) On my test he missed three questions out of 60. According to the state, his score in my class was better than 17% of the students in his cohort (all the students statewide who had similar incoming scores).

Fair enough… but does that compare with a student who also got a 17% growth rate, but whose cohort was in the range of 30 misses out of 60? The higher-achieving cohorts are much more competitive: their students are more likely to be motivated to do well and are already closer to their full potential.

At the upper end of the spectrum, one or two lucky or unlucky guesses can make for big swings in the growth rate. I had a student with the same incoming numbers who missed one question, and her growth rate was 58%. Another student of mine with the same incoming numbers had a growth rate of 85%. He had a perfect score.

That’s another interesting anomaly on the high end. I had five students get perfect scores. That’s the very best they could possibly have done. Their mean growth rate was 89%.

But here’s the real problem. If you teach higher-level students, you should be expanding well beyond a narrow interpretation of the standards. You should be enriching your curriculum to stretch your students as far as they can go. But that enrichment will not show up on a test. With the growth model, teachers of students with high incoming scores cannot afford for their students to miss any questions.

So instead of enriching, we are being pushed to drill over the “assessable” part of the standards. Instead of plowing new ground, the system is forcing us to go over and over the same material in the hopes of perfect scores.

I’m someone who believes that we can learn a lot from analyzing test results. But I also believe, and professional statisticians agree, that we are misusing the data with grossly oversimplified analysis. Worse, it is pushing teachers not to improve their craft for the sake of their students but to gin up their numbers for the sake of their own careers.

@Hall County Teacher

As part of the validation effort of the growth model for the CRCT and EOCTs, special emphasis was given to whether any ceiling effects existed on the assessments that led to biased growth results. Those analyses, including review by nationally recognized technical experts, led to the conclusion they did not.

To answer the specific question posed in the posting: Yes, a student growth percentile of 17 for a high-achieving student does mean the same thing as a student growth percentile of 17 for a low-achieving student. Both students grew more than 17% of their academic peers.
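To make the phrase "grew more than 17% of their academic peers" concrete, here is a deliberately simplified Python sketch. It is not the state's method, which relies on quantile regression. It only ranks a student's current score against a fixed list of peers who had similar prior scores. The function name `growth_percentile` and all scale scores below are hypothetical, chosen only so that both students land on 17.

```python
def growth_percentile(student_score, peer_scores):
    """Percent of academic peers whose current score is strictly lower.

    Simplified stand-in for an SGP: a plain percentile rank within a
    peer group, not the quantile-regression model the state describes.
    """
    if not peer_scores:
        raise ValueError("peer_scores must not be empty")
    lower = sum(score < student_score for score in peer_scores)
    return 100 * lower / len(peer_scores)


# Hypothetical peer groups of 100 students each (made-up scale scores).
high_achieving_peers = list(range(850, 950))  # similar, high prior scores
low_achieving_peers = list(range(700, 800))   # similar, low prior scores

# Each student outscored exactly 17 of the 100 peers in their own group.
high_student = growth_percentile(867, high_achieving_peers)
low_student = growth_percentile(717, low_achieving_peers)
print(high_student, low_student)
```

In this toy setup both students receive the same value even though their scale scores differ by 150 points, which is the sense in which the percentile is relative to each student's own peer group. The sketch says nothing about whether small score differences near the top of the scale are reliable, which is the concern raised in the earlier comment.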

The state assessments go through rigorous quality control procedures, including review by nationally recognized technical experts to ensure the scores produced are technically sound and appropriate for the uses established by the state. SGP calculations are sensitive to the psychometric properties of the assessments used in the calculations.

With that said, however, it is important that we acknowledge that no measurement instrument is perfect for all purposes and uses. In an effort to maximize the information around the cut scores of 800 and 850 (to ensure students are accurately classified as meeting or exceeding expectations), there is less information at both extremes of the scale (i.e., very low achieving students and very high achieving students). Given the historical and legislated uses of the test, this was a necessary trade-off. Importantly, special attention will be given to maximizing information along the entire achievement continuum during the development of Georgia Milestones – specifically because the scores will be used in the growth model and inform the educator effectiveness measures.

The growth model calculation uses the scale scores produced by the state assessments. SGPs utilize quantile regression with b-spline smoothing to create growth norms that model the relationship between students’ current and prior achievement scores. While the final metric produced – a percentile – is simple and straightforward to interpret (one of the advantages of the model), the calculations underlying it are sophisticated and among the most technically sound methods available today. That is why it has been adopted by approximately 20 states.

As stated earlier, there is no such thing as a perfect test or a perfect growth model – both will have associated measurement error. It is important that such error is minimized, to the extent it can be, and that it is taken into consideration when interpreting the data, be it a test score or the growth measure. Additionally, there are always limitations and trade-offs. As a state, we strive to make informed decisions to develop and implement the best instruments and policies possible given the purposes and uses of the resulting data. Part of that process includes having technical experts and Georgia educators at the table for the development and implementation of these programs.

Any time the SGP data is used – whether that is for student-level diagnostics, educator effectiveness, or school accountability – it should be considered in connection with other information. For student diagnostics, we encourage the use of test scores, classroom performance, and teacher observations, to name a few, along with the SGP results to provide a more complete picture of student performance.

For educator evaluation, SGPs are one of multiple measures (along with Student Learning Objectives, Teacher Assessment on Performance Standards, Leader Assessment on Performance Standards, achievement gap, and surveys) used to provide a signal of effectiveness.

With the Teacher Keys Effectiveness System, teachers receive a growth rating of 1-4 based on the mean of their students’ growth percentiles. The highest rating of 4 is given with a mean growth percentile above 65 (meaning that, on average, the teacher’s students are demonstrating high growth). For school accountability (CCRPI), SGPs are one of many indicators used to describe the performance of a school.
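The arithmetic behind the teacher rating can be sketched in a few lines of Python. Only the cutoff for the top rating (a mean growth percentile above 65) is stated here, so the sketch checks that one condition and does not guess at the cutoffs for ratings 1 to 3. The function names are hypothetical, and the sample values are the three growth percentiles quoted in the Hall County teacher's comment (17, 58, and 85), used purely as an illustration and not as a real class roster.

```python
from statistics import mean

# Stated above: a rating of 4 requires a mean growth percentile above 65.
TOP_RATING_CUTOFF = 65


def mean_growth_percentile(student_sgps):
    """Average the growth percentiles of a teacher's students."""
    if not student_sgps:
        raise ValueError("student_sgps must not be empty")
    return mean(student_sgps)


def earns_top_rating(student_sgps, cutoff=TOP_RATING_CUTOFF):
    """True when the mean growth percentile is strictly above the cutoff."""
    return mean_growth_percentile(student_sgps) > cutoff


# Growth percentiles quoted in the teacher's comment (illustrative only).
sample_sgps = [17, 58, 85]
sample_mean = mean_growth_percentile(sample_sgps)
print(round(sample_mean, 1), earns_top_rating(sample_sgps))
```

For these three students the mean is about 53.3, which falls short of the cutoff for a rating of 4 even though one of the three posted a growth percentile of 85.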

We assert that SGPs, while not perfect, are a valid and reliable measure of student growth that provides valuable information to enhance our understanding of student performance in Georgia. For that reason, we believe it is important to make this information available to educators, parents, and the public.